One of the exciting new features in SAS 9.3 (well, for me anyway) is the ability to replace or modify the logging configuration of a running server on the fly, without needing to restart it. Previously, in SAS 9.2, you could dynamically change the levels of loggers on servers (via the Loggers tabs in SAS Management Console's Server Manager) but not modify the appender configuration. Now in SAS 9.3 you can completely change the logging configuration file and apply it without restarting the server. This is exciting because it means you can do something like add a new appender for a new custom metadata server log file in the middle of the day, without having to restart the entire platform of dependent services. That’s really neat!

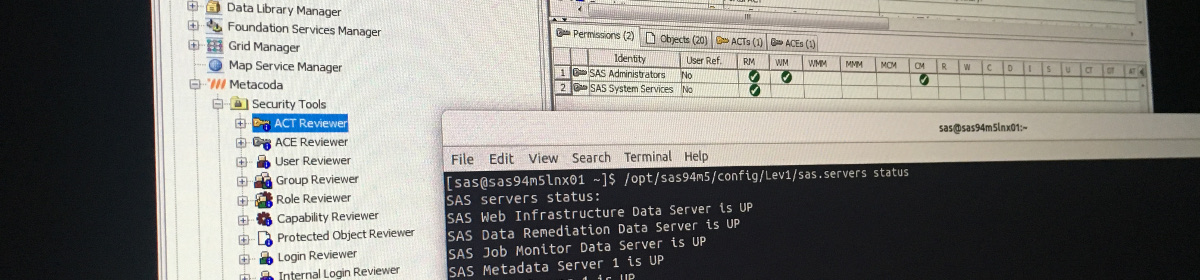

I tried it out. I made a small change to the metadata server's logconfig.xml, saved it, and then waited … watching the log file. I’d thought that maybe the servers had a watchdog thread to monitor changes to the log config file (a bit like log4j’s configureAndWatch), but after watching and waiting for a little while nothing happened. I then checked the documentation (always a good idea) and found that you actually need to prod the server to reload its logging config, using proc iomoperate (also new in SAS 9.3).

This is the code I used to tell the SAS Metadata Server to reload its logging configuration file (logconfig.xml) – the one I had changed.

```sas
/* connect to the metadata server and tell it to reload logconfig.xml */
proc iomoperate;
   connect host='sas93lnx01' port=8561 user='sasadm@saspw' password='thesecretpassword';
   set log cfg="/opt/ebiedieg/Lev1/SASMeta/MetadataServer/logconfig.xml";
quit;
```

It worked. The metadata server immediately closed its current log file (SASMeta_MetadataServer_2012-01-10_sas93lnx01_1266.log) and opened a new one with a _0 suffix (SASMeta_MetadataServer_2012-01-10_sas93lnx01_1266.log_0) using the modified config. I wondered why it had added the _0 suffix. Subsequent executions increase the suffix (_1, _2, etc.). Perhaps it was done to delineate the old and new logging config in case someone wanted to parse the logs? If anyone knows how to make it continue to log to the same log file, I’d love to know.

Of course, this dynamic logging reconfiguration also means you can readily switch between normal and trace-level logging config files to troubleshoot a problem and then switch back again quickly – all without a restart. Something like this:

```sas
* switch to trace log configuration;
proc iomoperate;
   connect host='sas93lnx01' port=8561 user='sasadm@saspw' password='thesecretpassword';
   set log cfg="/opt/ebiedieg/Lev1/SASMeta/MetadataServer/logconfig.trace.xml";
quit;

* >>> REPLICATE THE PROBLEM;

* switch back to normal log configuration;
proc iomoperate;
   connect host='sas93lnx01' port=8561 user='sasadm@saspw' password='thesecretpassword';
   set log cfg="/opt/ebiedieg/Lev1/SASMeta/MetadataServer/logconfig.xml";
quit;
```
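Because each switch repeats the same connection details, one option is to wrap the step in a small macro so those details live in one place. This is only a sketch under stated assumptions: the macro name set_log_config and its parameters are hypothetical (not part of SAS), and the default host, port, user and password are simply the example values used above.

```sas
/* Hypothetical helper macro (not part of SAS): reloads a server's logging  */
/* configuration with PROC IOMOPERATE. The defaults are the example values  */
/* from this post; substitute your own server and credentials.              */
%macro set_log_config(cfg, host=sas93lnx01, port=8561);
   proc iomoperate;
      connect host="&host" port=&port
              user='sasadm@saspw' password='thesecretpassword';
      /* &cfg resolves inside double quotes to the config file path */
      set log cfg="&cfg";
   quit;
%mend set_log_config;

/* switch to trace log configuration */
%set_log_config(/opt/ebiedieg/Lev1/SASMeta/MetadataServer/logconfig.trace.xml);

/* >>> REPLICATE THE PROBLEM */

/* switch back to normal log configuration */
%set_log_config(/opt/ebiedieg/Lev1/SASMeta/MetadataServer/logconfig.xml);
```

Using keyword parameters with defaults keeps the common case to a one-line call, while host= and port= can still point the same macro at a different server.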

For more information, see the following SAS documentation:

- SAS® 9.3 Logging: Configuration and Programming Reference

- SAS® 9.3 Intelligence Platform: Application Server Administration Guide

Hi Paul,

Great post. I have just come across it because of some logging work I am completing at a London-based trading firm, and noticed that you had posed a question about the _0, _1, _2, … _n suffix added to the log file name when using Proc IOMOPERATE to set a new logging configuration file without having to restart the server.

Within the XML configuration file, two parameter elements, identified by their name= attributes Unique and Append, control whether or not the _n increment is used for output log files. It is also worth noting that n has a maximum value of 32766.

The SAS 9.3 Logging: Configuration and Programming Reference states that:

—

name="Unique" value="TRUE | FALSE"

creates a new file, with an underscore and a unique number appended to the filename, if the log file already exists when logging begins. Numbers are assigned sequentially from 0 to 32766.

For example, suppose Events.log is specified in path-and-filename. If the files Events.log and Events.log_0 already exist, then the next log file that is created is named Events.log_1.

Default: FALSE

Requirement: This parameter is not required.

Interactions:

If both the Unique parameter and the Append parameter are specified, then the Unique parameter takes precedence. If the log file already exists when logging begins, messages are logged as follows:

- If Unique is set to TRUE and Append is set to either TRUE or FALSE, then messages are written to a new file with a unique number appended to the filename.

- If Unique is set to FALSE and Append is set to TRUE, then messages are appended to the end of the existing file.

- If Unique is set to FALSE and Append is set to FALSE, then the contents of the existing file are erased and overwritten with new messages.

—

Based on the interactions above, to keep logging to the existing file you would set the Unique parameter to FALSE and the Append parameter to TRUE in the relevant appender definition of your XML configuration file.

Cheers,

Matt

Hi Matt,

Thanks for your comment and also for answering that question. Now that you point it out, I don’t know why I didn’t think of that myself – I’ve seen that parameter many times already :) I think I’d rather use Unique=FALSE, Append=TRUE or Unique=TRUE, Append=TRUE|FALSE to avoid losing any log contents. Furthermore, Unique=FALSE, Append=TRUE is probably only worthwhile (for me) when the log line format remains the same (and I’m just configuring another appender or tweaking loggers); otherwise, parsing code would need to handle the change in format partway through a log file. After this consideration, the installed default of Unique=TRUE now sounds pretty sensible to me.

Once again, thanks for the explanation.

Cheers

Paul