I ran into an interesting problem over the last few days with my SAS® 9.3 deployments on Linux. I had noticed that the sas.servers scripts for my Lev2 and Lev3 deployments were taking several minutes to start the SAS services. I know JBoss takes ages to start, but usually the SAS services start really quickly. I had assumed Remote Services was the culprit, but surprisingly it turned out to be the SAS/CONNECT Job Spawner service that was the problem. Even more surprisingly, the underlying cause turned out to be the hostname used in the log file name: the logical hostname alias I used during deployment was different from the physical hostname of the machine it was deployed on.

To start the troubleshooting process, I modified the sas.servers script to add some timing information to the console log messages it generated. Editing the sas.servers script is not necessarily the best option, but more on that later. The sas.servers script has a function called logmsg which seems to have been provided specifically to make its logging easy to customize. I wanted to make a simple change to add a timestamp so I could see where all the time was being spent. I changed the function from this (reformatted and comments omitted for brevity):

```sh
logmsg() {
    echo "$*"
}
```

… to this:

```sh
logmsg() {
    DT=`date +%Y-%m-%d:%H:%M:%S`
    echo "$DT: $*"
}
```
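If you want to try the timestamp format on its own before touching sas.servers, here is a minimal standalone sketch of the same change. It assumes a POSIX shell and uses `$(...)` instead of backticks, which behaves the same way here.

```sh
#!/bin/sh
# Standalone copy of the timestamped logmsg, for trying the format in isolation
logmsg() {
    DT=$(date +%Y-%m-%d:%H:%M:%S)   # e.g. 2011-10-30:21:51:31
    echo "$DT: $*"
}

logmsg "Starting SAS servers"
# prints the current time in front, e.g. 2011-10-30:21:54:54: Starting SAS servers
```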

I stopped the services since they were already running:

```
root@sas93lnx01:~# cd /opt/ebiedieg/Lev3
root@sas93lnx01:/opt/ebiedieg/Lev3# ./sas.servers stop
2011-10-30:21:51:31: Stopping SAS servers
```

I then checked that they were all stopped:

```
root@sas93lnx01:/opt/ebiedieg/Lev3# ./sas.servers status
2011-10-30:21:51:59: SAS servers status:
2011-10-30:21:51:59: SAS Metadata Server 1 is NOT up
2011-10-30:21:51:59: SAS OLAP Server 1 is NOT up
2011-10-30:21:51:59: SAS Object Spawner 1 is NOT up
2011-10-30:21:51:59: SAS Share Server 1 is NOT up
2011-10-30:21:51:59: SAS CONNECT Spawner 1 is NOT up
2011-10-30:21:51:59: SAS Remote Services 1 is NOT up
2011-10-30:21:51:59: SAS Framework Data Server 1 is NOT up
```

Once stopped, I started them again so I could see where all the time was spent:

```
root@sas93lnx01:/opt/ebiedieg/Lev3# ./sas.servers start
2011-10-30:21:54:54: Starting SAS servers
2011-10-30:21:55:03: SAS Metadata Server 1 is UP
2011-10-30:21:55:07: SAS OLAP Server 1 is UP
2011-10-30:21:55:11: SAS Object Spawner 1 is UP
2011-10-30:21:55:11: SAS Share Server 1 is UP
2011-10-30:21:56:12: SAS CONNECT Spawner 1 is NOT up
2011-10-30:21:56:28: SAS Remote Services 1 is UP
2011-10-30:21:56:32: SAS Framework Data Server 1 is UP
```

The total start-up time was 98 seconds. Not too bad really, but it should be quicker than that. Each of the other services seemed to come up within seconds of the one before it, except for the SAS/CONNECT Job Spawner! It looked like I had been wrong to suspect Remote Services. The SAS/CONNECT Job Spawner appeared to take 60 seconds only to be reported as not up. It had started, though: both the process list and the job spawner log file confirmed it. It all seemed very odd, for two reasons: firstly, the job spawner is usually very fast to start, and secondly, 60 seconds is a suspiciously round number; it sounded like a timeout.
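The durations above come straight from subtracting the timestamps. To compute them rather than eyeball them, here is a small sketch. The `elapsed` helper is hypothetical (it is not part of sas.servers) and assumes GNU date, whose `-d` option parses the date string.

```sh
#!/bin/sh
# Hypothetical helper: seconds between two timestamps in the
# YYYY-MM-DD:HH:MM:SS format produced by the modified logmsg.
# Assumes GNU date (for -d); other date implementations differ.
elapsed() {
    # Split at the first colon: "2011-10-30:21:54:54" -> "2011-10-30 21:54:54"
    start="${1%%:*} ${1#*:}"
    end="${2%%:*} ${2#*:}"
    # Interpret both as UTC so a daylight-saving change cannot skew the difference
    s=$(TZ=UTC date -d "$start" +%s) || return 1
    e=$(TZ=UTC date -d "$end" +%s) || return 1
    echo $((e - s))
}

elapsed 2011-10-30:21:54:54 2011-10-30:21:56:32   # prints 98 (whole start-up)
elapsed 2011-10-30:21:55:11 2011-10-30:21:56:12   # prints 61 (CONNECT Spawner wait)
```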

The sas.servers script starts each SAS service in turn, waiting for it to become available before starting the next one. It looked as if sas.servers was waiting for some event from the job spawner and giving up after 60 seconds, assuming it had not started. Time to dig into the sas.servers script a bit more.

The SAS/CONNECT job spawner is started by the sas.servers script in the start_connect_spawner function. It launches the spawner (ConnectSpawner.sh start) in the background and then calls the is_atypical_server_up function to wait for it to finish starting. Looking into the is_atypical_server_up function, it repeatedly sleeps and greps the job spawner log for some trigger text. Effectively:

```sh
grep "SAS Job Spawner for Open Systems" /opt/ebiedieg/Lev3/ConnectSpawner/Logs/ConnectSpawner_sas93lnx01.log
```
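This kind of poll-and-grep loop explains the fixed 60-second delay: if the log file the script looks at never exists, grep can never match, so the loop always runs to its limit. A minimal sketch of such a loop, assuming a POSIX shell; `wait_for_log_text` is a hypothetical name and is not the real is_atypical_server_up, whose internals may differ.

```sh
#!/bin/sh
# Hypothetical poll-the-log wait loop in the spirit of is_atypical_server_up.
# Usage: wait_for_log_text LOGFILE TEXT TIMEOUT_SECONDS
wait_for_log_text() {
    logfile=$1
    text=$2
    timeout=$3
    waited=0
    while [ "$waited" -lt "$timeout" ]; do
        # -q: exit status only; -F: match TEXT as a fixed string.
        # A missing file just makes grep fail (stderr silenced), so we keep waiting.
        if grep -qF "$text" "$logfile" 2>/dev/null; then
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 1    # timed out: file missing or trigger text never appeared
}

# wait_for_log_text /opt/ebiedieg/Lev3/ConnectSpawner/Logs/ConnectSpawner_sas93lnx01.log \
#     "SAS Job Spawner for Open Systems" 60 || echo "CONNECT Spawner is NOT up"
```

Pointed at a file name that is never created, the commented call above would always wait the full 60 seconds and then report failure, matching what I saw.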

Now I could see the problem. It was scanning a non-existent log file, ConnectSpawner_sas93lnx01.log, when it should have been scanning ConnectSpawner_sas93lnx03.log, the file the job spawner was actually writing to. The log file name contained the wrong hostname. The name sas93lnx01 was the physical name of the machine, but sas93lnx03 was the hostname alias I used when deploying the Lev3 environment. I prefer to use hostname aliases in deployments because they make it easy to move deployments between physical machines and provide disaster recovery options. In this case the Lev3 environment was on the same physical machine as the Lev1 environment, but I knew one day I would move it onto another machine, so I had used a hostname alias, sas93lnx03 (a DNS CNAME record), which I could easily redirect later to the appropriate physical machine name (DNS A record).

So the SAS/CONNECT job spawner was using the deployment (logical) hostname alias, whereas the sas.servers script was using the physical hostname, which it got from `uname -n`. With the wrong log file name, the script could never find the log file, so it never saw the trigger text and always timed out after 60 seconds.
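To spot the same mismatch on other deployments, you can check whether the log file named after `uname -n` actually exists and, if not, list what is there instead. A sketch assuming the Logs directory and `ConnectSpawner_<hostname>.log` naming shown above; `check_spawner_log` is a hypothetical helper.

```sh
#!/bin/sh
# Hypothetical diagnostic: does the spawner log that sas.servers will look for
# (named after the physical hostname) exist, and if not, what exists instead?
# Usage: check_spawner_log LOGDIR [HOSTNAME]   (HOSTNAME defaults to uname -n)
check_spawner_log() {
    logdir=$1
    host=${2:-$(uname -n)}
    expected="$logdir/ConnectSpawner_${host}.log"
    if [ -f "$expected" ]; then
        echo "OK: $expected"
        return 0
    fi
    echo "MISSING: $expected"
    # Show any spawner logs that do exist, e.g. ones named after a hostname alias
    for f in "$logdir"/ConnectSpawner_*.log; do
        [ -f "$f" ] && echo "  found instead: $f"
    done
    return 1
}

# check_spawner_log /opt/ebiedieg/Lev3/ConnectSpawner/Logs
```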

There were at least two ways I could fix this problem. The first was to modify the ConnectSpawner.sh script to use the physical hostname, rather than the deployment hostname alias, in the log file name. The second was to modify the sas.servers script to use the deployment hostname alias instead of the physical hostname. That was the option I chose. It was the slightly more difficult of the two, but I didn’t feel right telling the job spawner to log to a file with the wrong name :)

These were the changes I made to sas.servers to get it to work. Firstly, I added an extra line after the one where the SHOSTNAME variable is set, changing this:

```sh
SHOSTNAME=`uname -n`
```

… to this:

```sh
SHOSTNAME=`uname -n`
SHOSTNAME_ALIAS="sas93lnx03"
```
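Hard-coding the alias works, but it ties the edited script to one deployment. A hedged variant, assuming you can set an environment variable for whoever runs sas.servers (for example an init script): `SAS_HOSTNAME_ALIAS` is a made-up name, not a SAS setting, and when it is unset or empty the script falls back to the physical hostname.

```sh
#!/bin/sh
SHOSTNAME=`uname -n`
# Hypothetical: take the alias from SAS_HOSTNAME_ALIAS (invented name, not a
# SAS setting); fall back to the physical hostname when it is unset or empty.
SHOSTNAME_ALIAS="${SAS_HOSTNAME_ALIAS:-$SHOSTNAME}"
```

For example, `SAS_HOSTNAME_ALIAS=sas93lnx03 ./sas.servers start` would use the alias, while a deployment that used the physical name needs no extra setting.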

Secondly, I modified the start_connect_spawner function to change the name of the log file (as checked by the is_atypical_server_up function) from this:

```sh
SLOGNAME="ConnectSpawner_${SHOSTNAME}"
```

… to this:

```sh
SLOGNAME="ConnectSpawner_${SHOSTNAME_ALIAS}"
```

As it happens, the same problem occurs with the deployment tester server component, so I also edited the start_deployment_testsrv function, changing this:

```sh
SLOGNAME="DeploymentTesterServer_${SHOSTNAME}"
```

… to this:

```sh
SLOGNAME="DeploymentTesterServer_${SHOSTNAME_ALIAS}"
```

However, there is a potential problem with editing sas.servers directly, which I alluded to earlier in the post. The sas.servers script is a generated script, and any edits made to it will be wiped out if it is regenerated at a later date; the SAS documentation for the sas.servers script cautions against editing it. The sas.servers script is created by the generate_boot_scripts.sh script, so the next time someone or something runs generate_boot_scripts.sh, the changes to sas.servers will be lost.

To protect against this, you could modify the templates that generate_boot_scripts.sh uses. This is what I did: I made all the same changes described above in the single template /opt/ebiedieg/Lev3/Utilities/script_templates/sas.servers.mainlog so they would survive a regeneration. I wouldn’t necessarily recommend this, though. It is not a method documented by SAS Institute, and I suspect these templates will change at some point during a SAS software upgrade or hotfix. However, it works for me for the time being. Looking back, it probably would have been better to just change the ConnectSpawner.sh script to use the wrong hostname ;)
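Because an upgrade or hotfix may replace the template, or someone may regenerate sas.servers from an unpatched one, it can help to script the three edits so they are easy to reapply. A minimal sketch, assuming GNU sed and that the lines appear in the file exactly as quoted in this post (the real file may indent or word them differently, so check the result); `apply_alias_patch` is a hypothetical helper, not part of the SAS tooling.

```sh
#!/bin/sh
# Hypothetical helper: re-apply the three edits described above to FILE
# (sas.servers itself or the sas.servers.mainlog template).
# Assumes GNU sed (-i and the one-line "a text" form) and that the original
# lines match the patterns below exactly.
# Usage: apply_alias_patch FILE ALIAS
apply_alias_patch() {
    file=$1
    host_alias=$2
    # Skip files that already define the alias, so a second run is harmless
    if grep -q '^[[:space:]]*SHOSTNAME_ALIAS=' "$file"; then
        echo "already patched: $file"
        return 0
    fi
    # -i.bak edits in place and keeps the original as FILE.bak
    sed -i.bak \
        -e '/^[[:space:]]*SHOSTNAME=`uname -n`$/a SHOSTNAME_ALIAS="'"$host_alias"'"' \
        -e 's/"ConnectSpawner_${SHOSTNAME}"/"ConnectSpawner_${SHOSTNAME_ALIAS}"/' \
        -e 's/"DeploymentTesterServer_${SHOSTNAME}"/"DeploymentTesterServer_${SHOSTNAME_ALIAS}"/' \
        "$file"
}
```

For example, run `apply_alias_patch /opt/ebiedieg/Lev3/sas.servers sas93lnx03`, then diff the file against its `.bak` copy before restarting the servers.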

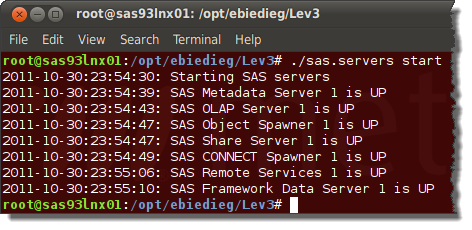

With those changes done it was time to stop and start the servers to see any improvements:

```
root@sas93lnx01:/opt/ebiedieg/Lev3# ./sas.servers start
2011-10-30:23:54:30: Starting SAS servers
2011-10-30:23:54:39: SAS Metadata Server 1 is UP
2011-10-30:23:54:43: SAS OLAP Server 1 is UP
2011-10-30:23:54:47: SAS Object Spawner 1 is UP
2011-10-30:23:54:47: SAS Share Server 1 is UP
2011-10-30:23:54:49: SAS CONNECT Spawner 1 is UP
2011-10-30:23:55:06: SAS Remote Services 1 is UP
2011-10-30:23:55:10: SAS Framework Data Server 1 is UP
```

This time it took only 40 seconds (less than half the original time), and the SAS/CONNECT Job Spawner is now reported as being up.

As it turns out, several of the other SAS services (like the metadata server) also use the physical hostname in their log file names. It all works fine, but I would have preferred them to use the deployment hostname if possible. I briefly looked into ways of telling those servers to use the logical hostname alias instead but, since they use the SAS logging framework and the %S{hostname} conversion pattern, I couldn’t see any obvious way other than editing all of the config files and scripts post deployment. If someone knows a good way of consistently and automatically using the deployment hostname (the one provided during deployment, which may differ from the physical hostname) during installation, then I’m all ears :)
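If you do go down the manual-editing route, a first step is finding where the pattern is used. A sketch, assuming the `%S{hostname}` pattern appears literally in the files under the configuration directory; `find_hostname_patterns` is a hypothetical helper.

```sh
#!/bin/sh
# Hypothetical helper: list files under a configuration directory that
# contain the %S{hostname} conversion pattern (candidates for hand editing).
# -r: recurse, -l: print file names only, -F: fixed string, not a regex.
find_hostname_patterns() {
    grep -rlF '%S{hostname}' "$1"
}

# find_hostname_patterns /opt/ebiedieg/Lev3
```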