Update 26Sep2018: This post is now several years old and naturally technology and security have progressed in that time. For more up to date information regarding delegation and, in particular, the requirement for constrained delegation when working with Windows Defender Credential Guard in Windows 10 and Windows Server 2016, please see Stuart Rogers’ very useful SAS Global Forum 2018 Paper: SAS 9.4 on Microsoft Windows: Unleashing Kerberos on Apache Hadoop.

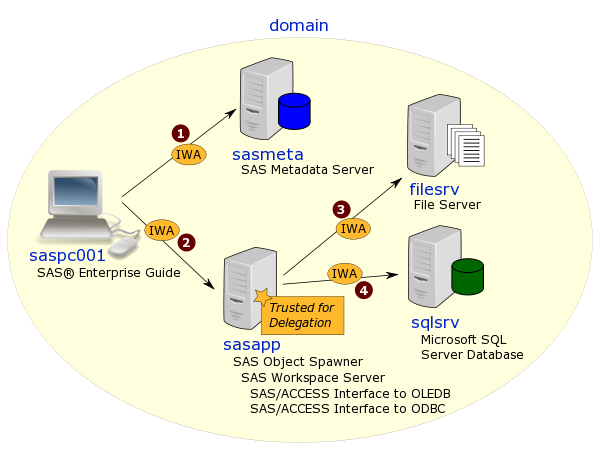

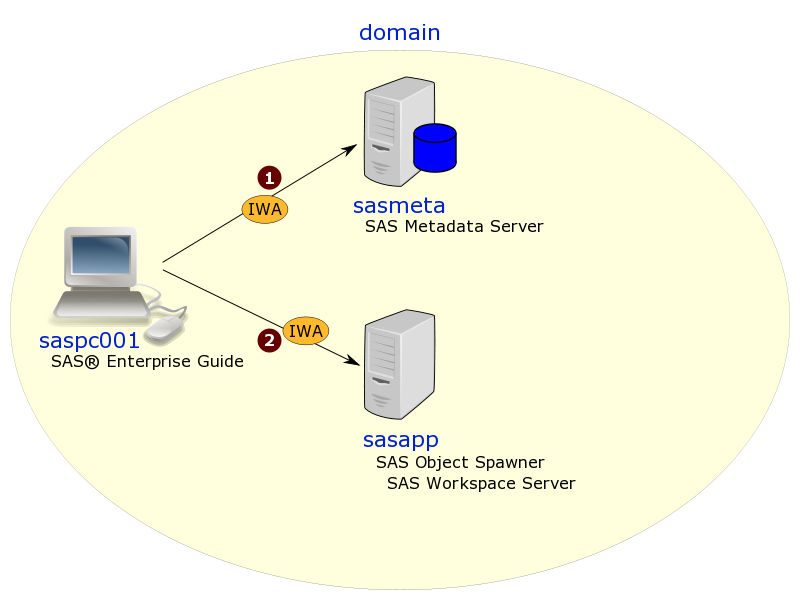

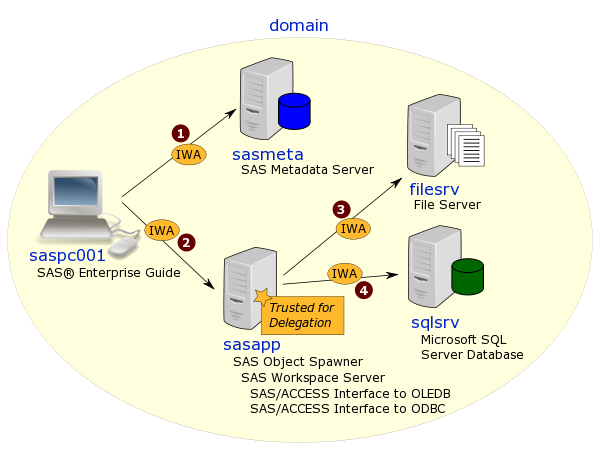

I mentioned in a previous post that host machines need to be Trusted for Delegation when a SAS® software component, such as a SAS Workspace Server, needs to make outgoing connections to secondary servers when the initial incoming connection was made using Integrated Windows Authentication (IWA).

When a server needs to be Trusted for Delegation, it takes a domain administrator to change the machine account in Active Directory. I rarely have domain admin privileges when working at customer sites so I usually can’t do this for myself. :( This post describes the method I use, as a lowly domain user, to verify that a Windows server has been configured in Active Directory as Trusted for Delegation.

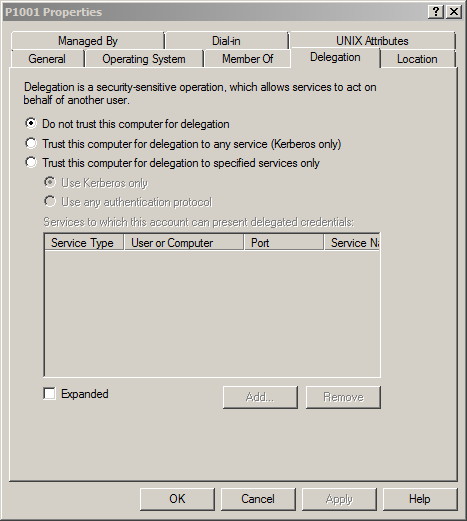

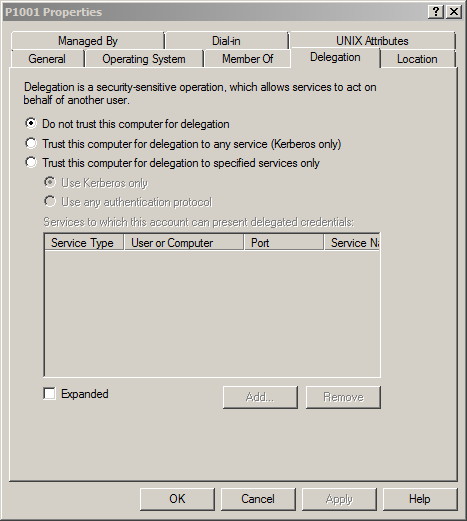

The screenshot below shows an example of what the domain admin might see on the Delegation tab of the Properties dialog for the machine account in Active Directory, using the Active Directory Users and Computers tool (under Start > All Programs > Administrative Tools).

This machine account (P1001) is not yet trusted for delegation. The domain admin would click the radio button for “Trust this computer for delegation to any service (Kerberos only)”.

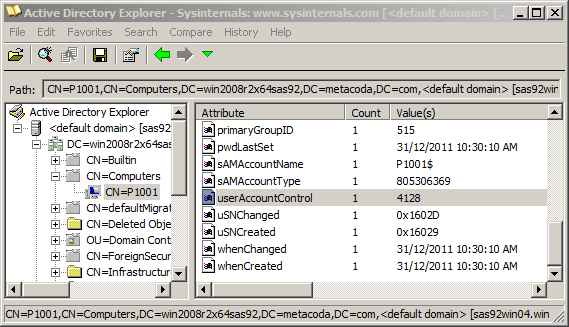

Once the domain admin has advised that the change has been applied, we can test it out from the SAS platform. What happens if the test still fails? Everyone makes mistakes from time to time, even domain admins; maybe the wrong machine account was modified (it does happen). So to avoid wasting time later on, I like to verify that the server is definitely trusted for delegation before I move on to checking other things.

I could ask the domain admin to email me a screenshot of the dialog to confirm, but they’re likely very busy people, so why not do it myself? I often don’t have access to the Active Directory Users and Computers tool, so I have to find another way to verify trusted for delegation. This is where the very useful AdExplorer utility helps. It’s one of the SysInternals tools available for download from Microsoft. As the name suggests, it provides an explorer interface to Active Directory so you can browse the objects and their attributes.

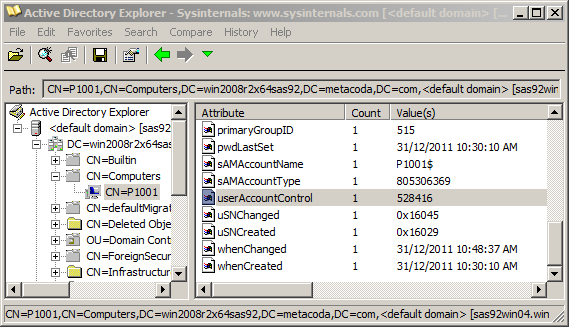

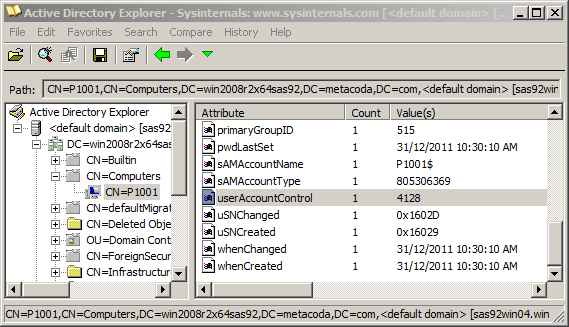

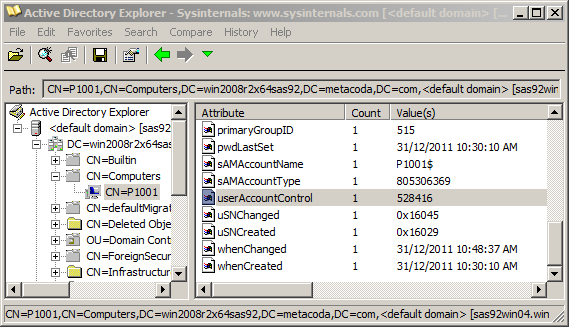

Here’s an AdExplorer screenshot showing the same machine account (P1001) from the dialog shown earlier.

I have selected the userAccountControl attribute and can see it has the value 4128. This is not good; I’ll explain why in a moment :) Essentially it means that the machine is not trusted for delegation. What I would rather see is the next screenshot, where it has the value 528416, meaning it is trusted for delegation.

So where do these magic numbers come from, and how do we know that 528416 is trusted and 4128 is not? The userAccountControl value is a bitmap value (or bit array). There are a number of possible flags that can be set in this value, and they are documented in the Microsoft resource “How to use the UserAccountControl flags to manipulate user account properties”. The main flag of interest here is TRUSTED_FOR_DELEGATION, with value 0x80000 (hex) or 524288 (decimal), which is listed in the document as:

TRUSTED_FOR_DELEGATION – When this flag is set, the service account (the user or computer account) under which a service runs is trusted for Kerberos delegation. Any such service can impersonate a client requesting the service. To enable a service for Kerberos delegation, you must set this flag on the userAccountControl property of the service account.

The (trusted) decimal value 528416 (hex 0x81020) we saw above consists of TRUSTED_FOR_DELEGATION (decimal 524288, hex 0x80000) + WORKSTATION_TRUST_ACCOUNT (decimal 4096, hex 0x1000) + PASSWD_NOTREQD (decimal 32, hex 0x0020). The (untrusted) decimal value 4128 (hex 0x1020) we saw earlier consists only of WORKSTATION_TRUST_ACCOUNT (decimal 4096, hex 0x1000) + PASSWD_NOTREQD (decimal 32, hex 0x0020). It’s missing the TRUSTED_FOR_DELEGATION value. You might see other values in your environment, including other flag values, but the important thing to check is that it includes the TRUSTED_FOR_DELEGATION value.
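If you would rather not do the hex arithmetic by hand, the check can be sketched in a few lines of Python. This is only an illustration of the bit test described above, not part of AdExplorer or any SAS tooling: the function names `decode_uac` and `is_trusted_for_delegation` are my own hypothetical helpers, and the flag table contains only the three flags discussed in this post (the Microsoft document lists many more).

```python
# Flag values as given in Microsoft's UserAccountControl flags document.
# Only the three flags discussed in this post are listed here (an assumption
# made to keep the sketch short; real accounts may carry other flags too).
UAC_FLAGS = {
    0x0020: "PASSWD_NOTREQD",
    0x1000: "WORKSTATION_TRUST_ACCOUNT",
    0x80000: "TRUSTED_FOR_DELEGATION",
}

TRUSTED_FOR_DELEGATION = 0x80000


def decode_uac(value):
    """Return (names of the known flags set in value, bits not in the table)."""
    names = []
    remainder = value
    for bit, name in UAC_FLAGS.items():
        if value & bit:
            names.append(name)
            remainder &= ~bit  # clear the bit we have accounted for
    return names, remainder


def is_trusted_for_delegation(value):
    """True if the TRUSTED_FOR_DELEGATION bit is set in a userAccountControl value."""
    return bool(value & TRUSTED_FOR_DELEGATION)


if __name__ == "__main__":
    # The two values seen in the AdExplorer screenshots.
    for uac in (4128, 528416):
        names, remainder = decode_uac(uac)
        print(uac, hex(uac), names, "trusted:", is_trusted_for_delegation(uac))
```

A non-zero remainder from `decode_uac` simply means the account has flags set that are not in this short table; the delegation check itself depends only on the 0x80000 bit.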

If you know of any other ways to verify a server’s trusted for delegation status (as a normal domain user) please let me know by leaving a comment.

For more posts in this series have a look at the IWA tag.